What Bits Mean: Binary Integers and Two’s Complement

I was explaining two’s complement recently1 to a friend, and I thought my explanation was decent, so I decided to write it up and share it with you, my general blog audience, as well! If you already know about two’s complement, this will pretty much just be a review. If not, you may learn something, and you may not understand all of it. Try to get what you can without getting too anxious, there will not be a test!

In either case, feel free to ask questions in the comments or nit-pick any mistakes you see!

Storing Numbers in Binary#

So, without further ado, let’s talk about binary.

Computers store all information in binary, in terms of combinations of two values, conventionally called “zero” and “one.” It is easy to distinguish two values in a physical representation: the presence or absence of current on a wire, or of a radio signal; two areas magnetized in the same direction or opposite direction; a capacitor that is charged or not charged. Only having two possibilities for each storage location is just easier for computing circuitry to work with, and so all information in a computer is stored as patterns of two values.

All information in a computer is stored in binary, whether it be text, images, audio, video, scientific data, and even programs. Binary is meaningless without a convention for interpreting it. Today, we will talk about how numbers are stored in binary, specifically integers.

So how do we encode integers in binary? Let’s start out by assuming all the integers we might want to store are non-negative, and then we will discuss later how to accommodate negative numbers.

Computers encode integers similar to how humans do with a pen and paper,

by using a positional number system. When we read a number like 357,

we know that it contains 3 hundreds (3*10^2), 5 tens (3*10^1) and

7 ones (7*10^0), where ^ is used as the symbol for exponentiation.

Computers use a similar system, but with 2 playing the role of 10. Said another way, computers use base 2 instead of base 10. So, 1101 represents the number 13:

1101

1 * 2 ^ 3 + 1 * 2 ^ 2 + 0 * 2 ^ 1 + 1 * 2 ^ 0

1 * 8 + 1 * 4 + 0 * 2 + 1 * 1

8 + 4 + 0 + 1

13

Instead of (from right to left) seeing a ones place, tens place, and hundreds place, we have a ones place, a twos place, a fours place, and an eights place. It continues with increasing powers of 2 (again, right to left in the number): 1, 2, 4, 8, 16, 32, 64, 128, 256, 512, 1024, 2048, 4096, 8192, 16384, 32768, 65536…

When we write numbers in base 10, we write as many digits as it takes to store the number, and no more. 357 is a 3-digit number, but 5364 is a 4-digit number. But when computers store numbers in binary, they generally have a fixed amount of memory to work with, memory that is specifically designated to store this number. If the number can’t be stored in that many binary digits – that many bits – then, well, it can’t be stored in that amount of memory. Programmers in lower-level languages (like C++ or Rust) simply have to be careful that this situation doesn’t arise, either by ensuring no larger numbers will be stored, or else checking for situations where larger numbers would be stored, and either signalling an error or arranging an alternative storage arrangement.

Usually, a programming language would support 8-bit, 16-bit, 32-bit,

or 64-bit numbers. For unsigned numbers, these correspond to the types

uint8_t, uint16_t, uint32_t and uint64_t in C or C++, or u8,

u16, u32 and u64 in Rust. A type, by the way, is a particular way

of looking at a collection of bits in binary as a value, determining

both a mode of interpretation and a size of how many bits are included

in the value.

All the bits in the allocated space must be set to 0 or 1. So, if we

want to store the number 13 in an 8-bit number, we have to use 0s for

all the higher-order bits: 00001101 means the same as 1101, just as

000357 means the same thing as 357.

For the purposes of this document, however, let’s talk about 4-bit integers. 4 bits is half a byte or a nibble. Most processors do not have the capacity for directly interfacing with 4-bit integers – they only deal with 8 bits, or one byte, at a time. But you can still program with 4-bit numbers, you just have to do some extra work. And it makes it possible to show every possibility in this document. The lessons learned from it generalize to wider numbers.

Here are all the possible 4-bit integers and their decimal (base 10, normal human) equivalents:

0000 -> 0

0001 -> 1

0010 -> 2

0011 -> 3

0100 -> 4

0101 -> 5

0110 -> 6

0111 -> 7

1000 -> 8

1001 -> 9

1010 -> 10

1011 -> 11

1100 -> 12

1101 -> 13

1110 -> 14

1111 -> 15

Note that the maximum is one less than 2^4, which is 16. There

are 16 possible combinations of bits, but the maximum value is 15,

as one of the possible combinations is used to encode 0. All

integer types support storing 0.

So, how do we do addition? It’s through a very similar process to how

we do addition in base 10. We take the two numbers we want to add,

and line them up bit by bit. Let’s add 7 and 3, which we can see

from the table are 0111 and 0011. Let’s line them up in classic

grade school fashion:

0111

0011

Then, we can start adding. We start from the right, just as we did when

doing arithmetic in grade school. 1 + 1 is 2, which in base 2 is

10. So we write down the 0, and carry the 1:

1

0111

0011

----

0

OK, now 1 + 1, + the 1 we carried from the previous step, is 3,

which in base 2 is 11. We write down the (right) 1 and carry the

(left) 1:

11

0111

0011

----

10

Now, 1 + 0, + the 1 we carried from before, is 2, which is 10.

Keep the 0, carry the 1:

111

0111

0011

----

010

Final step, 0 + 0 + the 1 we carried is 1:

111

0111

0011

----

1010

This has 1 in the 8s place and 1 in the 2s place, and 0 in the

other places. 8+2=10, which is good because we were adding 7 and 3,

so 10 is the right answer. If we look in the table above, we see

that 1010 indeed corresponds to 10, so we know we’ve done

it right.

So that is how we add binary numbers.

Adding Numbers with Circuitry or Program Logic#

The addition table, as we can see, is very simple, so it can be represented in circuitry. For each bit, we have three inputs: one bit each from the two numbers, and the carry from the previous bit. We have two outputs, the bit we’re keeping, and the carry to pass on to the next bit.

We can create a complete table for this:

BIT A | BIT B | INPUT CARRY | OUTPUT CARRY | OUTPUT

0 | 0 | 0 | 0 | 0

0 | 0 | 1 | 0 | 1

0 | 1 | 0 | 0 | 1

0 | 1 | 1 | 1 | 0

1 | 0 | 0 | 0 | 1

1 | 0 | 1 | 1 | 0

1 | 1 | 0 | 1 | 0

1 | 1 | 1 | 1 | 1

Whenever we have a complete table of a limited number of inputs and outputs, it can be converted to a circuit. If we did so, and then wire a bunch of these circuits together, we could create a hardware adder. I will not go into how to do this in detail, as that is out of the scope of this post, but you can see how it would be simpler than encoding an entire addition table for base 10 in logic gates, which would have 200 entries instead of 8 (addition table with and without carried 1s).

I will, however, show you how simple it is conceptually by writing

a program to do it in Rust. In this program, the binary numbers are

represented as slices of bools, or true/false values. (A slice

is a region of memory with multiple values of the same type.) true

corresponds to 1, and 0 corresponds to false. In this program,

the slices start from the right (the least significant bit, the one’s

place), and go to the left (the most significant bit, the 2^N place) –

backwards from what you may be used to, but better suited for implementing

math like addition.

fn add(a: &[bool], b: &[bool]) -> Result<Vec<bool>, Error> {

// Keep track of carry

// 'mut' means it can change

let mut carry = false;

// Place to store the result

//

// A `Vec` lets us store a varying number of values of the

// same type. It's like a slice, but it can grow over time.

// `Vec::new()` gives us an empty `Vec`.

let mut res = Vec::new();

// Make sure we have the same number of bits in each input

if a.len() != b.len() {

// We don't? That's an error!

return Err(Error::MismatchedInputSizes);

}

// Go through each position

for i in 0..a.len() {

// Examine the corresponding input bits from each input nibble

// Also examine the carry from the previous step.

// The result will be the output and the new carry value.

//

// This corresponds to a "full adder" circuit, and in a hardware

// adder, one of these is used per bit.

let (output, new_carry) = match (a[i], b[i], carry) {

// We have a table of what possibilities there are

// for these input bits, and what two outputs to generate.

//

// In a circuit, this would be expressed through logic gates.

// All 8 possible combinations of 3 inputs are enumerated.

// 8 = 2 ^ 3

//

// This is a Rust representation of the table shown above.

(false, false, false) => (false, false),

(false, false, true) => (true, false),

(false, true, false) => (true, false),

(false, true, true) => (false, true),

(true, false, false) => (true, false),

(true, false, true) => (false, true),

(true, true, false) => (false, true),

(true, true, true) => (true, true),

};

carry = new_carry;

// This bit is added to our result

res.push(output);

}

// What if the result doesn't fit in the same number of bits?

// Because the highest order bits have a carry

// We'll write some guess temporarily for now, and

// discuss carries in more detail later.

if carry {

panic!("error message"); // ??? or something?

}

// We have successfully obtained a result!

Ok(res)

}

A full, runnable program is available on GitHub, as are the other examples from this post.

This program uses booleans as a stand-in for bits, with true standing

in for 1 and false for 0. It contains the table we created above,

but in the form of a match expression. It loops through the two values,

from least-significant bit (rightmost, 1’s place) to most-significant

bit (leftmost, 2^N place), bringing the carry output from each place

and using it as input in the next operation.

Ironically, actually running this program will actually use many more

than 4 bits for each number. It is designed to correspond conceptually

with the details of adding a 4-bit number. In practice, we’d use the

built-in u8 type and let the computer’s built-in addition circuitry

do it for us.

Overflow#

So, what happens if we have a carry on the last bit? If we’re adding two 4-bit numbers, and we’re storing the result in a 4-bit number, what happens if we add 10 and 10? The result won’t fit in a 4-bit number! You might assume (as my friend Ilse Purrenhage did) that there would be “an error message or something” (and therefore that’s what the Rust sample code does)!

Let’s see what happens in practice!

Here is a Rust program that overflows an 8-bit unsigned integer.

fn main() {

let mut integer: u8 = 255;

integer += 1;

println!("{integer}");

}

Here is a C program:

#include <stdint.h>

#include <stdio.h>

int main() {

// The highest 8-bit unsigned integer possible is 255,

// or 2^8 - 1, the highest number you can represent

// before you need a 2^8 or 256 place in binary.

uint8_t integer = 255;

// OK, so what happens if we add 1 to it?

integer += 1;

// Let's print it out and see!

printf("%u\n", integer);

}

Let’s start with the Rust program, as this is a Rust-focused blog.

[jim@palatinate:~/Writing/thecodedmessage-examples]$ cargo run --bin overflow

Compiling thecodedmessage-examples v0.1.0 (/home/jim/Writing/thecodedmessage-examples)

Finished dev [unoptimized + debuginfo] target(s) in 0.37s

Running `target/debug/overflow`

thread 'main' panicked at 'attempt to add with overflow', overflow.rs:3:5

stack backtrace:

0: rust_begin_unwind

at /rustc/90c541806f23a127002de5b4038be731ba1458ca/library/std/src/panicking.rs:578:5

1: core::panicking::panic_fmt

at /rustc/90c541806f23a127002de5b4038be731ba1458ca/library/core/src/panicking.rs:67:14

2: core::panicking::panic

at /rustc/90c541806f23a127002de5b4038be731ba1458ca/library/core/src/panicking.rs:117:5

3: overflow::main

4: core::ops::function::FnOnce::call_once

note: Some details are omitted, run with `RUST_BACKTRACE=full` for a verbose backtrace.

Ah, looks like my friend Ilse was right, as this definitely qualifies

as “an error message or something.” It’s reasonable that Rust does

this! There’s no way to store 256 in a u8, so attempting to should

lead to an error.

But the experienced Rustaceans in the audience know there’s another

shoe that’s about to drop. We’ve finished developing overflow, and

we want to do a production release, so we run it in release mode, giving

the compiler more time to work on making the program run fast, and –

[jim@palatinate:~/Writing/thecodedmessage-examples]$ cargo run --release --bin overflow

Finished release [optimized] target(s) in 0.00s

Running `target/release/overflow`

0

Yes, that is indeed a 0 that was printed. 255 + 1 no longer results in an

error message. No, we now see that in debug mode, while we are still

developing the project, it results in an error. But once we switch to

release mode, we get a 0. And unfortunately, 0 is (checks notes)

not the sum of 255 + 1. This is really putting the “or something”

into “an error message or something!”

What is going on here? Alright, maybe this is one of the things they are complaining about when they say Rust is hard to learn. Let’s move on to C, the “easier” programming language –

[jim@palatinate:~/Writing/thecodedmessage-examples/src]$ cc -o overflow overflow.c

[jim@palatinate:~/Writing/thecodedmessage-examples/src]$ ./overflow

0

– in which it would also appear that, for 8-bit integers, 255 + 1 = 0.

So what exactly is going on? Well, let’s do out 255 + 1 in binary, using the good ol’ elementary school addition algorithm. We add 1 and 1, and get 10 (2), so carry the 1 and write down 0, which collides against the next 1, so carry the 1 and write down 0, until:

11111111 (carry bits)

11111111 (255)

00000001 (1)

---------

00000000

After doing all 8 bits, we have 8 bits of 0 as output, and still a 1

being carried to the 9th bit (also known as bit 8), the 256s place. Of

course, there is no 9th bit in the output, which is why we get an error

message or something when we run this. See our original binary.rs

program which does this algorithm out by hand using booleans:

[jim@palatinate:~/Writing/thecodedmessage-examples]$ cargo run --bin binary 00000001 11111111

Compiling thecodedmessage-examples v0.1.0 (/home/jim/Writing/thecodedmessage-examples)

Finished dev [unoptimized + debuginfo] target(s) in 0.41s

Running `target/debug/binary 00000001 11111111`

thread 'main' panicked at 'error message', src/binary.rs:64:9

stack backtrace:

0: rust_begin_unwind

at /rustc/90c541806f23a127002de5b4038be731ba1458ca/library/std/src/panicking.rs:578:5

1: core::panicking::panic_fmt

at /rustc/90c541806f23a127002de5b4038be731ba1458ca/library/core/src/panicking.rs:67:14

2: binary::add

at ./src/binary.rs:64:9

3: binary::main

at ./src/binary.rs:78:18

4: core::ops::function::FnOnce::call_once

at /rustc/90c541806f23a127002de5b4038be731ba1458ca/library/core/src/ops/function.rs:250:5

note: Some details are omitted, run with `RUST_BACKTRACE=full` for a verbose backtrace.

This was, if you remember from our example above, triggered because

the carry variable was still true after the entire loop!

// What if the result doesn't fit in the same number of bits?

// Because the highest order bits have a carry

if carry {

panic!("error message"); // ??? or something?

}

But what if we just remove that code?

[jim@palatinate:~/Writing/thecodedmessage-examples]$ cargo run --bin binary 00000001 11111111

Compiling thecodedmessage-examples v0.1.0 (/home/jim/Writing/thecodedmessage-examples)

Finished dev [unoptimized + debuginfo] target(s) in 0.39s

Running `target/debug/binary 00000001 11111111`

00000000

If we just ignore the last carry bit, instead of doing anything at all, we see that 1 + 255 does indeed equal 0. It equals 0, carrying a 1 into the 256s place, but if we just ignore that that carry might happen, it just equals 0. And that is what C does. And if we are asking Rust to optimize for performance rather than debuggability, that is also what Rust does.

In fact, this is exactly the behavior that the C standard explicitly requires for unsigned values. Code can be written that relies on this behavior.

So what exactly is happening here? How can we understand 255 + 1 = 0? Well, we are ignoring a carry, and ignoring places above the 128ths place. This creates a special form of math, where instead of increasing, at a certain point numbers wrap around, like an old-fashioned odometer going from 999999 to 000000, or a 24-hour clock going from 23:59 to 00:00, or the day of the month going from 31 to 1.

Modular Arithmetic#

This is known in mathematics as modular arithmetic, which is an important part of number theory, or the study of whole numbers and integers (as opposed to rationals and reals, where modular arithmetic would make little sense). In modular arithmetic, we treat numbers as if they are equal – some call it congruent – if they have the same remainder when divided by a number. The number we divide by can vary – it can be, for example, 2, 10, 24… or 256.

For example, 24-hour time works modulo 24. 23 (or 11PM) + 1 hour yields 0 (or midnight). 22 (or 10PM) + 4 hours yields 2 (or 2AM).

We can also do modular arithmetic modulo 10 by considering decimal

numbers and only paying attention to the one’s place. 3 * 5 = 15,

but we are only paying attention to the one’s place, so we say that

3 * 5 = 5 modulo 10.

One property of modular arithmetic is that negation works weirdly. When working modulo 10, 9 also serves as -1. They are equivalent modulo 10, because they are a multiple of 10 apart. It works functionally: 6+9=5 modulo 10, or 1+9=0 modulo 10. Adding 9 is the same as subtracting 1, so we can say that 9 is congruent to -1.

Well, in C, and in Rust with debugging turned off, arithmetic on a u8,

or any unsigned integer type, wraps around modulo the maximum value

plus 1. For 4-bit integers, this would be 1111 + 1 in binary (or 15 +

1 in decimal), or 16. For 8-bit integers, it’s 256.

This is a natural consequence of ignoring the final output of the carry bit. Just like ignoring digits above the 1s place yields modulo-10 arithmetic, ignoring bits above the 8s place yields modulo-16 arithmetic.

It does mean that, when we want to subtract 1 from a u8, we can instead

add 0 - 1, which is 256 - 1, which is 255. When we want to subtract 2,

we can instead add 0 - 2, aka 256 - 2, aka 254.

Two’s Complement#

In fact, this is how computers implement subtraction in circuitry – and not just how we implement subtraction, but also negative numbers. To negate a number, instead of counting up from 0, we count down from 0, but with wrap-around.

Let’s return to our 4-bit example, as that’s easier to work with. Each combination of bits can be interpreted as a negative number, or a positive number.

Bit Pattern | As Positive | As Negative

0000 | 0 | -16

0001 | 1 | -15

0010 | 2 | -14

0011 | 3 | -13

0100 | 4 | -12

0101 | 5 | -11

0110 | 6 | -10

0111 | 7 | -9

1000 | 8 | -8

1001 | 9 | -7

1010 | 10 | -6

1011 | 11 | -5

1100 | 12 | -4

1101 | 13 | -3

1110 | 14 | -2

1111 | 15 | -1

And, of course, it just wraps around after: 1111 + 1 (15 + 1) = 0000 (with a carry beyond the highest order digit).

For most operations, it doesn’t matter whether we interpret the number as positive or negative. Addition, subtraction, multiplication, and division will all wrap around. The computer just cares about the pattern of bits, and applies the circuitry to it.

Of course, if we want to display the number to the user, it matters. We don’t want the user to enter -1 and the computer to randomly display 15 later (or 255 for 8-bit, or 65535 for 16-bit, or 4294967295 for 32-bit). Somehow, we need some mechanism of deciding when we want to interpret the number as -1, and when we want to interpret it as 15.

Similary, comparisons. -1 is less than 1. 15 is greater than 1. Sign matters. Where does it wrap around? For what N is N + 1 < N?

It’s an arbitrary cut off. And there’s two conventions.

For one of the conventions, unsigned integers, well, it’s always positive. The N for which N + 1 < N is 15 (for 4 bit arithmetic), as 15 + 1 wraps around to 0. 15 > 1. 0 < 1. Subtract 0 - 1 and display it? You get 15.

The following program displays 15:

#include <stdio.h>

int main() {

struct {

unsigned four_bits: 4;

} bitfield;

bitfield.four_bits = 0; // Starts out with 0000

bitfield.four_bits -= 1; // Modify field by subtracting 1

printf("%u\n", bitfield.four_bits); // Display on screen

}

See the word unsigned there in the declaration of four_bits?

That’s the unsigned convention for arithmetic.

Well, the opposite of that is signed integers. For these, the numbers that are interpreted as negative are the numbers for which the highest-order bit is a 1. The cut-off N, for which N + 1 < N, is 7. 7 + 1 = -8. 7 + 1 < 7.

Here’s our original table with the standard signed interpretations left in:

Bit Pattern | As Positive | As Negative

0000 | 0 |

0001 | 1 |

0010 | 2 |

0011 | 3 |

0100 | 4 |

0101 | 5 |

0110 | 6 |

0111 | 7 |

1000 | | -8

1001 | | -7

1010 | | -6

1011 | | -5

1100 | | -4

1101 | | -3

1110 | | -2

1111 | | -1

For another way of looking at it, remember we discussed

that these bits were coefficients to powers of two.

0101 is 5 because it’s 0 * 2^3 + 1 * 2^2 + 0 * 2^1 + 1 * 2^0.

It has 1s in the 4s place and the 1s place, so it has 1 4, and 1

1, and nothing else.

Well, what if instead of that higher-order bit being the 8s place,

it were the -8s place? The other bits remain positive in value.

8 and -8 are equivalent modulo 16, so this doesn’t actually

change the meaning of the bit in the modular sense. But it does

change the meaning of the > and < operations.

0101 is still 5, but it’s 0 * (-8) + 1 * 4 + 0 * 2 + 1 * 1 now.

And 1111 is -1, because it’s 1 * (-8) + 1 * 4 + 1 * 2 + 1 * 1,

or -8 + 4 + 2 + 1 or -8 + 7 or -1.

I’m not making this needlessly complicated! If it is needlessly complicated, it’s not me who’s making it that way! This is how computers actually work, because it makes sense that way, from a circuitry design perspective and from an engineering perspective.

OK, now for some fun facts!

This way of storing signed integers in computers is known as two’s complement.

Two’s complement negation can be computed by inverting all the bits and adding 1. This is opposed to 1’s complement, where we negate by inverting all the bits, and the circuitry is annoying and stupid. It is also opposed to sign/magnitude, where the top bit indicates sign by negating the whole rest of the number if it is 1, rather than subtracting a value (e.g. 8 in 4-bit integers, or 128 in 8-bits).

Almost all computers use two’s complement to store integers these days, for all the reasons discussed above. For non-integers, all bets are off, but sign-magnitude is popular for floating point numbers overall.

In two’s complement, -1 is always represented as all bits 1. Why? Well, for the same reason 10,000 - 1 is 9,999, and 100,000 - 1 is 99,999.

In fact, if we start by 1 followed by many zeros in any base, and

we subtract 1, then we get the maximum digit of that base repeated.

If we’re in base 8, 1000 - 1 = 777. If in base 16, then 100 - 1 = FF

(in base 16, it is conventional to use the letters A-F to represent the

digits for 10-15).

Why is this? Well, doing the subtraction out, right to left, we start

with 10-1, with a borrowed 1. The value one less than the base is

the greatest digit of that base: 1 less than 10 is 9. Then, to get that

borrowed 1, we must go to the next digit to the left, and subtract that

1. But that digit is also a 0, so we must borrow a 1 even further

out, so we get 10-1 again. This continues until we get an actual 1

and can no longer borrow.

Of course, when subtracting from 0 in a computer context, you can always borrow past the left-hand side. 0000 and 10000 are the same bottom four bits; they are congruent modulo 16. Borrowing a 1 from off the end is always an option.

Or, considered another way, if you add binary 1 to 1111, you will get 10000, for the same reason that if you add 1 to 9,999, you will get 10,000. That last bit falls off the end, though, so 1111 is just -1, because 1111 + 1 = 0.

This also means that to negate a number N, you can:

- Invert all the bits. This gives you -1 - N.

- Add 1. This gives you -N.

Let’s do this one step at a time.

Invert all the bits. This gives you -1 - N. Let’s figure out how to do -1 - N, and we’ll see it works out to inverting all the bits. So, -1 has all bits 1, so no bit will need to be borrowed. If you subtract a 1 (from the N) from the 1 (from -1), it becomes a 0. If you subtract a 0 (from the N) from the 1 (from the -1), it becomes a 1.

Okay, perhaps it’s better to demonstrate visually. This is -1-5, as a subtraction problem in binary:

1111 (-1 aka 15)

- 0101 ( 5)

----

1010 (-6 aka 10)

By subtracting 1111 - 0101, we got 1010, the bit-inversion of

0101. Said another way, by inverting all the bits in 0101,

we got 1111 - 0101. Said another way, by inverting all the bits

in 5, we got -6.

- Add 1. This gives you -N.

And that is what computers do with signed integers when you

have an integer n and write let negate_n = -n; – the computer

provides circuitry internally that lets you invert all the

bits and then add one.

So, now we know how to represent numbers as signed and unsigned. But we also treat them as equivalent. That’s confusing. So what’s up with that?

For some operations, this representation makes it so you don’t need to care about signed and unsigned. These include addition, subtraction, and multiplication. As long as you assume that wrapping-around doesn’t happen (which is the faster, more efficient implementation in circuitry), these operations literally do the same thing in signed and unsigned. One person’s overflow is another person’s subtraction, but as long as we’re OK with overflow, subtraction is cool too.

Ironically, negation goes in this category. It doesn’t need to care which numbers you interpret as positive or negative to give you a number -N that, when you add it, undoes the effect of adding N.

However, if you want to do checked versions of addition, subtraction, and multiplication, where the program notices when adding two positive numbers results in a number smaller than both, and causes a trap, a stop in the program’s normal behavior, “an error message or something,” then how that check works differs in signed and unsigned.

This check would involve a version of the addition operation that actually used the carry output from the last bit. But there’s two interpretations of that. Should -1 + 1 trigger? In unsigned arithmetic, where it’s actually 15 + 1 (or 255 + 1, or 65,535 + 1), it probably should, because wrapping around to 0 is a trap. But in signed arithmetic, -1 + 1 is not an overflow at all.

So, in unsigned arithmetic, the normal carry output of the leftmost (most significant) adder circuit can be used. Given the leftmost input bit of the first argument, the leftmost input bit of the second argument, and the carry from the next adder circuit to the right (the second-most-significant bit, if you will), if 2 or more of those bits are 1, well, then, signal an overflow.

For signed arithmetic, it’s about how the sign bits line up in the input and the output.

Fun aside: The sign bit is another name in two’s complement for the most significant bit, as it is 1 if and only if the number is negative. Some overzealous teachers will say it’s not a sign bit because its significance can be ignored in many operations, but that’s even more obnoxiously pedantic than saying that there’s no such thing as centrifugal force, and more inaccurate. Every processor designer (including Intel and ARM) refer to this bit as the sign bit, which makes sense because it tells you what sign the number is.

If the two input sign bits are the same in an addition, and the result

bit is different, then you have a signed overflow. For example,

1111+1111=1110 is fine, as that’s -1 + -1 = -2. Similarly,

1111+0010=0001 is fine, as that’s -1 + 2 = 1. But 1000+1000=0000

is weird, as that’s -8 + -8 = 0. The two input sign bits are both 1

in an addition, and the output sign bit is 1 – suspicious.

So the situation where unsigned integers wrap around in defiance of normal integer math is known as “carry” on Intel, and is indicated by a “carry flag,” which can be checked to output an error message or something. Similarly, the situation where signed integers wrap around is known on Intel as “overflow,” which is indicated by an overflow flag.

The other flag is always meaningless. Which flags you check on Intel is an indication of whether you are doing unsigned or signed (i.e. two’s complement) arithmetic. And, since operations like less than and greater than are also implemented on Intel by checking flags, literally the only difference on Intel between signed and unsigned arithmetic is what flags you check.

Fun fact: Comparisons on Intel are done by the

cmpinstruction, which does a subtraction, throws away the result, and sets the flags accordingly. The flags can then be inspected to determine which input was greater or less, or less-or-equal or greater-or-equal, with either signed or unsigned semantics. All the same flags are set with a normal subtraction.

By the way, every time you add or subtract on Intel, both carry and overflow flags are set accordingly. It’s easier in circuitry to just do both, the operations are so similar.

You can read more on Intel’s flags here.

Summary#

Collections of bits can be used to store integers, using base 2. Addition, subtraction, and multiplication are implemented in such a way that wraps around, and any number can have a positive or negative interpretation. This weird type of math is called modular arithmetic.

If we interpret all the numbers as positive, then we are doing unsigned arithmetic. Programming languages represent this by referring to unsigned integers, but to the processor, they’re all just integers. The question is just what kind of arithmetic we’ll do. With this interpretation, adding two numbers and getting a smaller one is overflow, and subtracting from a number and making it bigger is called underflow. This may or may not be detected, but if it is, it is done on Intel by checking the carry flag. Besides that, it will happily do the arithmetic in a modular way. Less than and greater than are also evaluated via the carry bit.

If we interpret some of the numbers as negative, it’s based on the top-most bit, the sign bit. This is known as signed arithmetic, and programming languages will use this for signed integers. Numbers are still interpreted based on their negative meaning in modular arithmetic. In this interpretation, adding two negative numbers and getting a positive, or adding two positive numbers and getting a negative, is known as overflow, and it shows up in the overflow flag.

In either case, Intel processors perform addition, subtraction, multiplication, and negation the exact same way for signed and unsigned arithmetic. The only difference is the flag, and Intel will always do the work to set or clear both flags appropriately. To distinguish between signed and unsigned arithmetic, programming languages check the specific flag they’re interested in for appropriate operations, like less than and greater than operations.

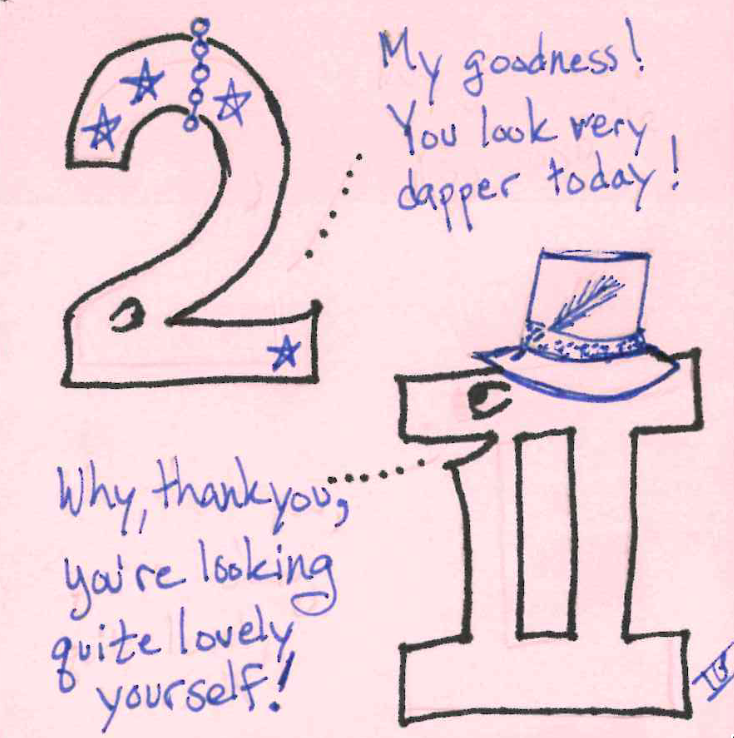

Two’s complement is not to be confused with “twos compliment” (drawing by my friend Ilse Purrenhage):

-

This word is relative. ↩︎

Subscribe

Find out via e-mail when I make new posts! You can also use RSS (RSS for technical posts only) to subscribe!

Comments

If you want to send me something privately and anonymously, you can use my admonymous to admonish (or praise) me anonymously.

comments powered by Disqus